The signals beneath the surface

Multi Asset Boutique

Introduction

So far in our Expl(AI)ning series we have introduced artificial intelligence (AI), seen where its adoption stands in asset management, and given an intuition for how it can be used to deliver better outcomes. The missing piece of the puzzle is to demonstrate how we explain. Let us elaborate. When applying machine learning (ML) and AI in the investment process, one recurring challenge is the lack of transparency in how models reach their decisions. ML models are often referred to as black boxes, producing predictions or forecasts without revealing the reasoning behind them.

Many machine learning algorithms lack inherent interpretability, and only a few offer transparency by design. For the former group, researchers can use model-agnostic explanation techniques: methods that can interpret any model, regardless of its internal structure. We mainly deploy Gradient Boosted Tree-based algorithms, which are not inherently explainable. Among the model-agnostic explanation methods, SHAP values have emerged as a particularly useful tool for explaining the outputs of complex models that lack built-in interpretability. In this piece, we introduce SHAP values, describe how we use them, and show two examples of explanations taken directly from the results of an AI Powered stock picker model.

What are SHAP values?

SHAP values, short for SHapley Additive exPlanations, are a way to understand how each feature in a dataset contributes to a model’s prediction. Before diving deeper, it helps to recall the definitions of these two terms:

- A feature is simply an input variable the model uses to make decisions: for example, in financial data, features could include a company’s profit margin, debt ratio, or revenue growth.

- A model prediction is the model’s output: the value it estimates based on those inputs. In finance, that might be a predicted credit score, probability of default, or expected return.

So, when we say SHAP values explain how each feature contributes to a prediction, what we mean is that they tell us how much each input has pushed the model’s output higher or lower for a specific case. The concept of SHAP values originates from Shapley values in game theory where the goal is to fairly divide a total “payout” among players who worked together to achieve an outcome. In machine learning, you can think of a prediction as the “payout” and each feature (like P/E ratio, revenue growth, or debt level) as a “player” that contributes to that prediction. SHAP values tell us how much each feature helped (or hurt) the model’s final decision.
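The game-theory idea above can be made concrete with a small worked example. The sketch below computes exact Shapley values for a hypothetical three-feature scoring function. The feature names, coefficients and baseline are all invented for illustration, and a feature "absent" from a coalition is simply set to a single baseline value, which is a simplification of how SHAP implementations average over background data.

```python
from itertools import combinations
from math import factorial


def shapley_values(value, players):
    """Exact Shapley values for a coalition value function value(frozenset) -> float."""
    n = len(players)
    result = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Probability weight of a coalition of this size preceding player p
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                # Marginal contribution of p when joining coalition s
                total += weight * (value(s | {p}) - value(s))
        result[p] = total
    return result


# Hypothetical inputs: an "average" company and the stock being explained
BASELINE = {"profit_margin": 0.10, "debt_ratio": 0.50, "revenue_growth": 0.05}
STOCK = {"profit_margin": 0.25, "debt_ratio": 0.30, "revenue_growth": 0.12}


def model(x):
    """Toy scoring function (assumption); the product term makes it non-linear."""
    return (2.0 * x["profit_margin"] - 1.0 * x["debt_ratio"]
            + 3.0 * x["revenue_growth"]
            + 4.0 * x["profit_margin"] * x["revenue_growth"])


def coalition_value(known):
    """Features in the coalition take the stock's values, the rest the baseline."""
    x = {k: (STOCK[k] if k in known else BASELINE[k]) for k in BASELINE}
    return model(x)


phi = shapley_values(coalition_value, list(BASELINE))
base = model(BASELINE)
pred = model(STOCK)

for name, contribution in phi.items():
    print(f"{name:15s} {contribution:+.4f}")
print(f"sum of contributions {sum(phi.values()):+.4f}, prediction - baseline {pred - base:+.4f}")
```

The contributions sum to the gap between the stock's score and the baseline score, which is the "fair division of the payout" property. The exact calculation enumerates every coalition and therefore grows exponentially with the number of features; this is why practical tools rely on approximations or model-specific algorithms.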

The role of SHAP values in our AI Powered investment process

To understand how we use SHAP values we need to do a quick recap of how we use AI in the first place, starting with the three core ingredients of any AI system: data, learning architecture, and objective. As data scientists and engineers we focus on finding the right mix of these three to succeed at solving a problem through machine learning. In our case:

- Among other things, we rely on fundamental data as input, that is, numerical data coming from official balance sheets and reports.

- We use a Gradient Boosted Tree approach as the architecture, as it is a robust solution for sparse numerical data.

- We predict outperformance potential for each stock.

As we have already discussed in a previous piece (Architecture and data trump maths), in our view the best way to tackle a problem using machine learning can be boiled down to a simple concept: let the data speak. We do not impose a specific structure on the data or on the model. Hence, having a way to inspect how the model operates is important for understanding which characteristics of the data are driving the decisions. We start by explaining the impact of individual input features on single predictions, and then expand the approach by aggregating features and stocks into more commonly recognized groups (factors for features, sectors or countries for stocks) to give a higher-level view of the model’s positioning.

Single line SHAP: the AI’s view on single stocks

SHAP values are a powerful tool for interpreting the model’s view on a single stock, because they answer why the model made a specific prediction. This local perspective is particularly useful in investment research, where each company’s fundamental data tells a different story. For instance, by decomposing the model’s prediction for a single stock, we can see how features such as profitability, leverage, or valuation metrics contribute positively or negatively to its final prediction score. Our AI model uses over 200 features across fundamental, market and other data sources, so a chart of every individual SHAP value would be too complex to read. Because SHAP values are additive, we can instead group related features and sum their SHAP values into aggregate SHAP values. In Figure 1 we show these grouped SHAP values for the model’s latest prediction on one of the largest Swiss public companies: Roche Holding AG. From this analysis we see that the features representing the growth of the business have the most impact on the latest prediction, made at the end of October 2025. The numbers in the chart are the actual SHAP values. The total is the combined contribution of all features, that is, how far the prediction moves away from the average of all predictions. In this case we have rescaled the average to zero to make the chart more readable.
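The grouping step relies only on additivity, so it can be sketched in a few lines. The example below is illustrative: the feature names, the feature-to-pillar mapping and the SHAP numbers are all hypothetical and are not taken from the model or from Figure 1.

```python
from collections import defaultdict


def group_shap(shap_by_feature, feature_to_group):
    """Sum per-feature SHAP values into groups; unmapped features go to 'other'."""
    grouped = defaultdict(float)
    for feature, value in shap_by_feature.items():
        grouped[feature_to_group.get(feature, "other")] += value
    return dict(grouped)


# Hypothetical per-feature SHAP values for one stock on one date
shap_by_feature = {
    "sales_growth_vs_peers": 0.030,
    "net_income_growth": 0.015,
    "operating_margin": 0.010,
    "net_debt_to_ebitda": -0.008,
    "price_to_earnings": -0.012,
}

# Hypothetical mapping of features to pillars
feature_to_group = {
    "sales_growth_vs_peers": "growth",
    "net_income_growth": "growth",
    "operating_margin": "profitability",
    "net_debt_to_ebitda": "leverage",
    "price_to_earnings": "valuation",
}

grouped = group_shap(shap_by_feature, feature_to_group)
total = sum(grouped.values())  # distance of the prediction from the average prediction

for pillar, value in sorted(grouped.items(), key=lambda kv: -abs(kv[1])):
    print(f"{pillar:14s} {value:+.3f}")
print(f"{'total':14s} {total:+.3f}")
```

Because the grouped values are plain sums, the total is unchanged by the grouping: it still equals the sum of all per-feature SHAP values, whichever grouping is chosen.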

Comparing how the explanation for a company’s predictions changes over time adds further insight. It can reveal, for example, changes in the fundamental data or changes in the company’s positioning relative to other companies, which can then be analyzed in detail. These analyses help translate model outputs into intuitive, financially relevant explanations that may align with or differ from the reasoning of traditional analysis. In the growth pillar we consider features such as change in sales, revenues, net income and more, compared to peers and the whole market. For instance, in Figure 2 we can see that for Roche Holding AG the contribution from the growth-related features changed drastically around the end of July 2025. Notably, the company reported earnings on July 24th, marking an improvement in business growth. The model scored the new fundamental data and adjusted the company’s prediction. Through SHAP analysis, we were able to interpret which changes in the underlying data most affected the change in prediction score for Roche Holding AG.
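One simple way to read such a change is to difference the grouped SHAP values between two dates and rank the pillars by how much they moved. The sketch below uses invented numbers, not the values behind Figure 2, and assumes the same model and the same baseline (the average prediction) at both dates, so that the change in score equals the sum of the per-pillar changes.

```python
def explain_change(before, after):
    """Rank groups by the absolute change in their SHAP contribution."""
    groups = sorted(set(before) | set(after))
    deltas = {g: after.get(g, 0.0) - before.get(g, 0.0) for g in groups}
    return sorted(deltas.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical grouped SHAP values before and after an earnings release
before = {"growth": -0.010, "profitability": 0.012, "valuation": -0.005}
after = {"growth": 0.040, "profitability": 0.010, "valuation": -0.007}

ranked = explain_change(before, after)
score_change = sum(after.values()) - sum(before.values())

for pillar, delta in ranked:
    print(f"{pillar:14s} {delta:+.3f}")
print(f"{'score change':14s} {score_change:+.3f}")
```

In this made-up case the growth pillar accounts for nearly all of the move, which is the kind of pattern that would prompt a closer look at the newly reported fundamentals.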

Aggregated SHAP: The AI’s view on a sector

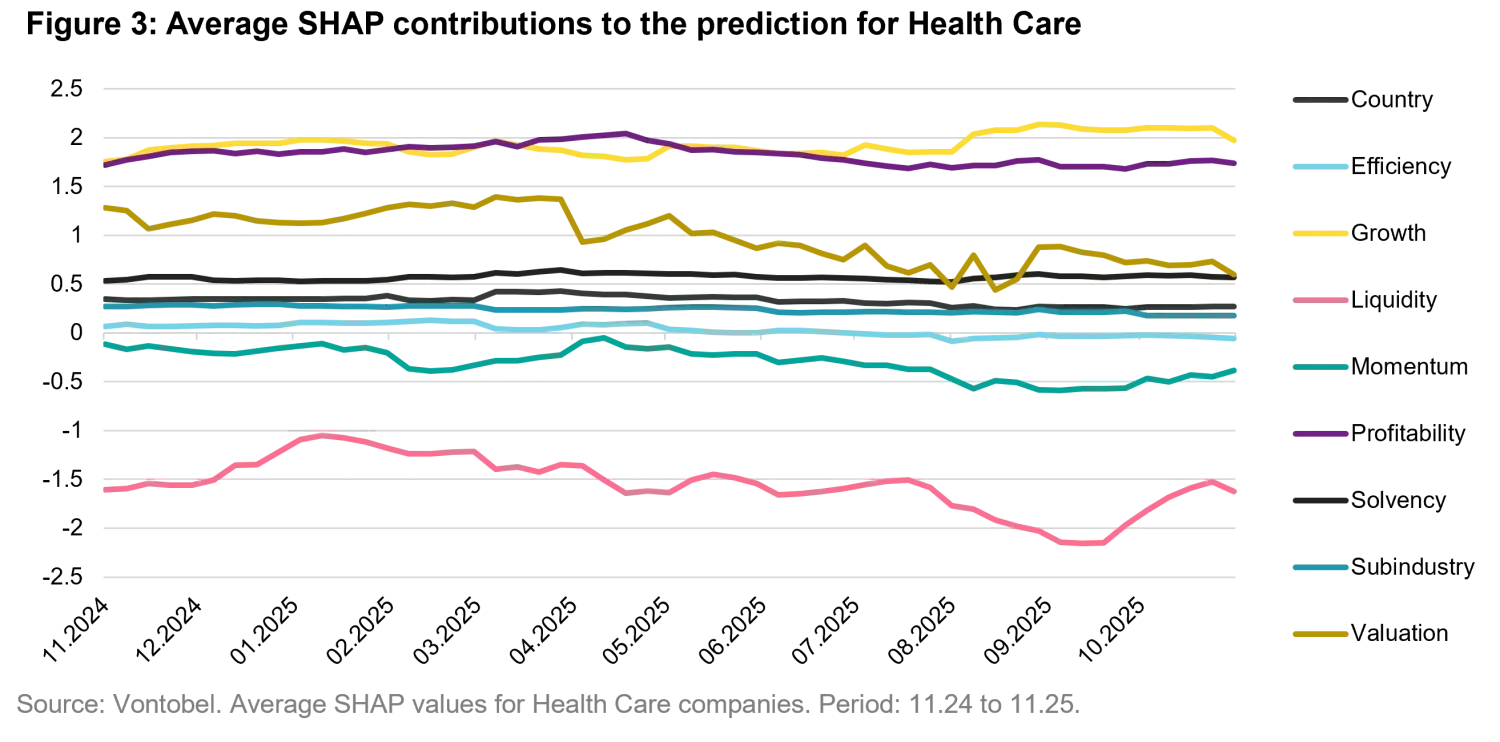

While single-stock SHAP values explain individual predictions, collective SHAP analyses reveal broader patterns covering entire sectors or the whole market. By aggregating SHAP values across many stocks, one can identify which groups of underlying financial features and values deliver positive or negative signals. For example, across the information technology sector, the model might attribute higher scores to firms with strong revenue growth and high margins, while in utilities, revenue stability and low leverage may score more positively. In Figure 3 we see that the growth pillar is driving predictions for the Health Care sector over the whole global investable universe of the MSCI All Country World Index. During the past year this was not always the case: profit-related features were prevalent in the first half of the year, and valuation features have also lost ground.

Aggregating SHAP results at the sector or market level helps uncover the model’s implicit themes, highlighting which fundamental features and values are considered favorable in different contexts, and how this aligns with or diverges from traditional investment intuition and expectations of market behavior.
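A sector-level view of this kind can be sketched by averaging the grouped SHAP values of all stocks in each sector. The example below is an assumption-laden illustration: the equal-weighted mean is one possible aggregation choice (the article does not specify the weighting used), and the sectors, pillars and numbers are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def sector_profile(rows):
    """Average grouped SHAP values per sector.

    rows: iterable of (sector, {pillar: shap_value}) pairs, one per stock.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for sector, grouped in rows:
        for pillar, value in grouped.items():
            buckets[sector][pillar].append(value)
    return {
        sector: {pillar: mean(values) for pillar, values in pillars.items()}
        for sector, pillars in buckets.items()
    }


# Hypothetical grouped SHAP values for three stocks
rows = [
    ("Health Care", {"growth": 0.04, "profitability": 0.01, "valuation": -0.01}),
    ("Health Care", {"growth": 0.02, "profitability": 0.03, "valuation": -0.03}),
    ("Utilities", {"growth": -0.01, "profitability": 0.02, "valuation": 0.01}),
]

profile = sector_profile(rows)
for sector, pillars in profile.items():
    top = max(pillars, key=pillars.get)
    print(f"{sector}: strongest positive pillar = {top} ({pillars[top]:+.3f})")
```

Other weighting schemes, such as weighting by market capitalization or by index weight, would fit the same structure by replacing the mean with a weighted average.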

Conclusion

Machine learning models are not necessarily black boxes. As we have seen, methods such as SHAP values enable us to derive interpretable explanations for model decisions. These capabilities provide insight into the circumstances behind the predictions and help us monitor that models behave as intended.

With this article, we conclude the Expl(AI)ning series, which began over a year ago with an introduction to the fundamentals of AI and machine learning. We hope this series has provided useful insights and practical guidance for understanding and applying explainability techniques within quantitative asset management, and that it supports you in building more transparent, trustworthy, and effective data-driven investment strategies.

Sources:

- Vontobel analysis.

- Roche Half Year results presentation https://assets.roche.com/f/176343/x/184f41bf07/irp250724-a.pdf

- SHAP Docs https://shap.readthedocs.io/en/latest/

- Christoph Molnar, Interpretable ML https://christophm.github.io/interpretable-ml-book/

Disclaimers: The content is created by a company within the Vontobel Group (“Vontobel”) for institutional clients and is intended for informational and educational purposes only. Views expressed herein are those of the authors and may or may not be shared across Vontobel. Content should not be deemed or relied upon for investment, accounting, legal or tax advice. References to holdings and/or other companies are for illustrative purposes only and should not be considered a recommendation to buy, hold, or sell any security discussed herein. Diversification does not ensure a profit or guarantee against loss. While the information herein was obtained from or based upon sources believed by the author(s) to be reliable, Vontobel makes no express or implied representations about the accuracy or completeness of this information, and the reader assumes any risks associated with relying on this information for any purpose. Vontobel neither endorses nor is endorsed by any mentioned sources.

This material may contain forward-looking statements, which are subject to uncertainty and contingencies outside of Vontobel's control. Recipients should not place undue reliance upon these forward-looking statements. There is no guarantee that Vontobel will meet its stated goals. Past performance is not indicative of future results. Investment involves risk, losses may be made.

The terms “AI System” and “Our AI” as used herein stand generically for a theoretical view of AI by Vontobel, and are used to illustrate how Vontobel approaches the topics discussed. The methods and models as described do not refer to any strategy offered.

MSCI Inc’s data and analytics were used in preparation of this information for illustrative purposes only. Copyright 2025 MSCI, Inc. All rights reserved.