Quant 2.0 - The AI Revolution

Quantitative investing is undergoing one of the most profound transformations since its inception. What began as a discipline grounded in financial theory, simple datasets and structured rules has entered a new phase shaped by artificial intelligence. Classical quant models helped investors navigate markets with discipline and scale, but today’s environment demands tools that can process richer data, capture more complex patterns and adapt more quickly to changing conditions. This article looks at where quant came from, how AI is reshaping it, and what the next chapter may bring.

Origins of Quant

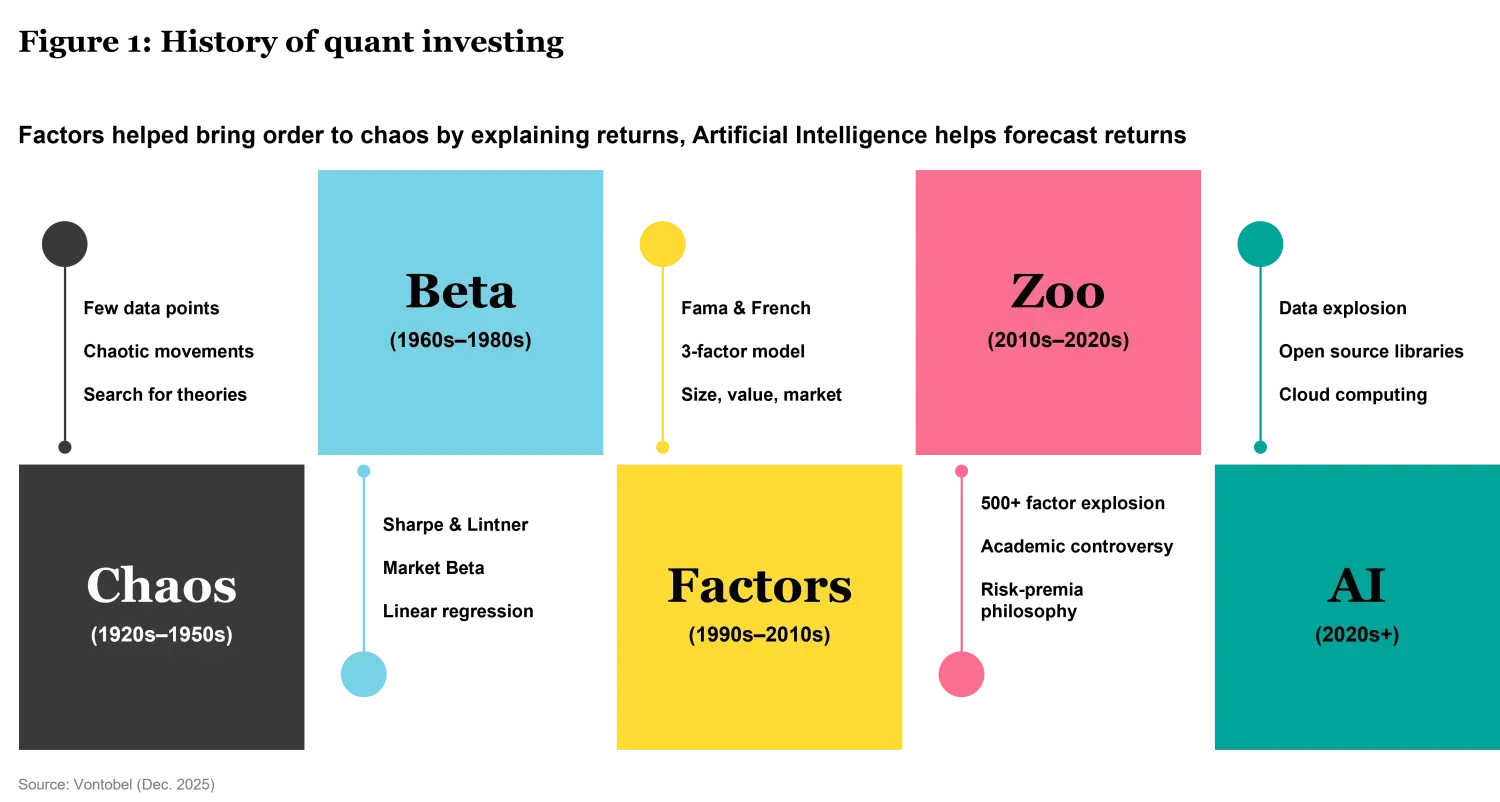

Quantitative investing began with a simple but powerful idea: markets may be noisy in the short term, but they are not entirely random. From the 1950s and 1960s onwards, researchers in mathematical finance began turning intuitions about risk, return and diversification into formal models. Portfolio theory (Markowitz, 1952), the concept of market risk formalized in the capital asset pricing model (Sharpe, 1964) and later empirical studies on value, size and momentum (e.g. Banz, 1981; Fama and French, 1992; Jegadeesh and Titman, 1993) laid the foundations of what became known as “quant investing.” Figure 1 highlights some of the main milestones in the history of quant investing.

By the 1980s and 1990s, with more computing power and more structured datasets, investors could test these ideas systematically. Backtesting allowed teams to sift through decades of market history to determine whether certain patterns, such as cheap stocks outperforming expensive ones, companies with improving fundamentals delivering excess returns, or past winners continuing to win, were persistent and robust. Quantitative investing matured into a discipline defined by rules, repeatability and rigor.

Classical quant systems offered two major advantages. First, they put process before emotion: buy when the rule says buy, sell when the rule says sell. Second, they scaled efficiently: once a model was defined, it could be applied consistently across hundreds or thousands of stocks. Factor investing, risk budgeting and systematic alpha strategies emerged as powerful ways to manage global portfolios.

However, these early approaches also had limitations. Most classical quant models assume that relationships between factors and returns are linear, additive and stable over time. Reality is rarely so neat. Markets go through regime changes, in which patterns that once dominated suddenly weaken or reverse. Classical models were also rigid: once a factor definition was set (e.g. “value equals book-to-price”), adjusting it required human intervention and long research cycles. And because they relied on a relatively small set of hand-crafted variables, they struggled to exploit the explosion of new data sources, from analyst text to alternative datasets, some of which now arrive in near real time.
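To make “linear, additive and stable” concrete, a classical composite factor score can be written as a fixed weighted sum of factor exposures. The notation below is an illustrative assumption, not taken from any specific model:

```latex
\hat{r}_i = \sum_{k=1}^{K} \beta_k \, x_{i,k}
\qquad \text{vs.} \qquad
\hat{r}_i = \sum_{k=1}^{K} \beta_k \, x_{i,k} + \gamma \, x_{i,\text{val}} \, x_{i,\text{rev}}
```

Here $x_{i,k}$ is stock $i$'s standardized exposure to factor $k$ (for example book-to-price) and $\beta_k$ is a fixed weight. In the left-hand form each factor contributes the same amount whatever the values of the others, and the weights do not change over time. A conditional effect, such as value working only when earnings revisions are positive, needs an explicit interaction term like $\gamma \, x_{i,\text{val}} \, x_{i,\text{rev}}$, which a researcher must first hypothesize and then add by hand.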

By the mid-2010s, many managers faced the same challenge: traditional quant still worked, but the edge was thinner. Markets had become more efficient, datasets broader, relationships more intertwined. A more flexible, more adaptive approach was needed. That set the stage for the next era.

The New Era of Quant 2.0 with AI

The past decade ushered in a transformation: the rise of machine learning in investment management. At its core, AI excels at detecting non-linear relationships, interactions between variables, and subtle patterns embedded in very large datasets. These are all areas where classical quant models often struggle. Instead of assuming the world behaves according to a pre-defined equation, machine learning learns the equation directly from the data.

Our Quant boutique has embraced this shift by building a dedicated Equities team focused on the systematic management of global and regional portfolios using machine learning. Team members come from deeply quantitative and technical backgrounds in data science, computer science and engineering. Just as the DeepMind researchers were not biologists yet revolutionized protein folding, our team members are not traditional “stock pickers”. Our edge comes from understanding learning systems and model behavior, and from engineering signals from high-dimensional data, rather than from subjective forecasting.

Our proprietary AI system evaluates thousands of global stocks through a large ensemble of models. Instead of relying on manual definitions of what “quality” or “momentum” means, the system automatically identifies which features matter most in the current environment and how they interact. Selection and weighting happen within a unified process: the AI system first generates forecasts for the entire global universe, and these forecasts are then fed into a portfolio optimizer, which translates the signals into final weights while balancing diversification, sector and country exposures, and other portfolio constraints. In practice, stocks with stronger model signals tend to receive larger allocations within this systematic framework.
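The forecast-to-weight step can be illustrated with a deliberately simplified stand-in for a portfolio optimizer. The sketch below is a hypothetical heuristic, not the optimizer described above: it assumes a long-only portfolio, keeps only names with positive forecasts, weights them in proportion to their signal and enforces a single per-stock cap (`max_weight`, an assumed parameter) by redistributing any excess to uncapped names. A real optimizer would also use a risk model and handle sector, country and turnover constraints.

```python
def signals_to_weights(forecasts: dict[str, float], max_weight: float = 0.10) -> dict[str, float]:
    """Hypothetical heuristic: long-only weights proportional to positive forecasts,
    capped per stock, with excess weight redistributed to names below the cap."""
    # Keep only names with a positive expected-return signal.
    positive = {name: f for name, f in forecasts.items() if f > 0}
    if not positive:
        raise ValueError("no positive forecasts to allocate to")
    if max_weight * len(positive) < 1.0:
        raise ValueError("cap too tight for a fully invested portfolio")

    weights: dict[str, float] = {}
    free = dict(positive)  # names whose weight is not yet pinned at the cap
    remaining = 1.0        # portion of the budget still to allocate
    while free:
        total = sum(free.values())
        # Tentatively split the remaining budget in proportion to signal strength.
        tentative = {n: remaining * f / total for n, f in free.items()}
        over = [n for n, w in tentative.items() if w > max_weight]
        if not over:
            weights.update(tentative)
            break
        # Pin every over-cap name at the cap, then reallocate what is left.
        for n in over:
            weights[n] = max_weight
            remaining -= max_weight
            del free[n]
    return weights


w = signals_to_weights({"AAA": 0.05, "BBB": 0.03, "CCC": 0.01, "DDD": -0.02}, max_weight=0.5)
print({n: round(x, 4) for n, x in w.items()})  # {'AAA': 0.5, 'BBB': 0.375, 'CCC': 0.125}
```

The ordering property mentioned in the text survives the cap: stronger signals never receive smaller weights, but the strongest name is held at the limit and the surplus flows to the next-best ideas.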

Of course, flexibility and raw predictive power are not enough. AI models must be trusted, understood and controlled. That is why our framework uses Shapley values to provide transparency: for each stock, they attribute the model’s prediction to the individual input features, showing whether a preference was driven by valuation, earnings revisions, financial momentum or balance-sheet strength. This explainability gives portfolio managers the ability to validate model behavior rather than simply accept it.
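As a rough illustration of how a Shapley attribution works, the sketch below computes exact Shapley values for a single prediction by enumerating every coalition of features. It assumes one common convention, replacing features outside a coalition with a fixed baseline (for example an “average stock”); other conventions exist. The two-feature `toy_score` model and its coefficients are hypothetical. Because the cost grows as 2^n, production tools rely on specialised algorithms (for example for tree ensembles) or sampling approximations instead.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition take their baseline value (an assumed
    convention). Only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` take their actual values.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_score(z):
    # Hypothetical model: z[0] = value, z[1] = earnings revisions,
    # plus an interaction term that an additive model would miss.
    value, revisions = z
    return 0.5 * value + 0.3 * revisions + 0.2 * value * revisions


phi = shapley_values(toy_score, x=[1.0, 2.0], baseline=[0.0, 0.0])
print([round(p, 6) for p in phi])  # [0.7, 0.8]
```

The two contributions add up to the gap between the stock's score (1.5) and the baseline score (0), and the interaction term is split evenly between the two features. This additivity is what makes Shapley values convenient for explaining why one stock is preferred over another.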

We also operate within a set of guardrails. To reduce the risk of overfitting, we rely on cross-validation, regularization techniques and sensible limits on model complexity, although none of these methods offers absolute guarantees. Continuous monitoring is therefore needed to detect when relationships start to weaken or behave differently: model drift is tracked over time so that the team can intervene when patterns change. Human oversight remains essential: the team evaluates unexpected signals, such as those caused by missing data or outliers, and ensures the system adheres to investment and risk standards.
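One common way to track whether a signal is weakening is the rank information coefficient (IC): the Spearman rank correlation between a period's forecasts and the returns that follow. The text does not specify which drift metrics the team uses, so the sketch below is an assumption. It computes a per-period rank IC and flags periods where its rolling mean falls to or below a threshold; `window`, `threshold` and the IC history are hypothetical. It monitors decay in predictive power, not shifts in input-feature distributions, which would need separate checks.

```python
from statistics import mean


def ranks(values):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def pearson(a, b):
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0  # no cross-sectional dispersion: treated as zero IC (an assumption)
    return cov / (va * vb) ** 0.5


def rank_ic(forecasts, realized):
    """Spearman rank correlation between forecasts and subsequent realized returns."""
    return pearson(ranks(forecasts), ranks(realized))


def drift_alerts(ic_history, window=3, threshold=0.0):
    """Indices of periods where the rolling mean IC is at or below `threshold`."""
    return [
        t
        for t in range(window - 1, len(ic_history))
        if mean(ic_history[t - window + 1 : t + 1]) <= threshold
    ]


monthly_ic = [0.08, 0.06, 0.05, 0.01, -0.02, -0.04]  # hypothetical IC history
print(drift_alerts(monthly_ic, window=3, threshold=0.0))  # [5]
```

An alert of this kind is a prompt for the human review described above rather than an automatic trigger: a falling IC may reflect a genuine regime change, a data problem or ordinary noise.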

This fusion of advanced machine learning, rigorous process and transparent oversight defines what we call Quant 2.0. It is already reshaping how portfolios are researched, built and managed.

The Future of Quant

If classical quant was about defining rules and AI-powered quant is about learning rules, then we expect the next era, Quant 3.0, to be about adaptive intelligence across the entire investment lifecycle.

In Quant 3.0, models will go beyond today’s adaptive capabilities, learning not only from historical patterns but also from evolving market behavior in real time. Future systems may identify emerging regime shifts earlier and respond more fluidly, adjusting exposures before the market consensus forms. Research pipelines could also become increasingly autonomous, able to generate hypotheses, test them, validate results and integrate improvements into portfolios with far less manual intervention than today.

We also expect further advances in multimodal data: combining fundamentals, prices, real-time news flow, satellite imagery, credit card transactions, supply-chain data and more into a single predictive framework. Large language models (LLMs) will play a much bigger role in interpreting narrative information such as analyst calls, management transcripts and regulatory filings, giving quant teams a deeper understanding of qualitative drivers than ever before.

Explainability will evolve as well. Today we use Shapley values; tomorrow’s techniques may provide dynamic causal explanations, showing how expected returns change when hypothetical scenarios unfold. Beyond understanding individual predictions, investors may gain a clearer view of the model’s broader reasoning: how it connects information, forms expectations and adjusts as conditions change.

The role of humans will change but remain essential. Researchers may increasingly act as “AI supervisors,” defining strategic objectives and the guardrails within which models operate, while automated systems perform large-scale hypothesis testing and exploration. This means less effort devoted to manual, time-consuming research steps and more capacity for humans to focus on conceptual thinking, model design, and economic interpretation.

Ultimately, Quant 3.0 will not be defined by technology alone but by how intelligently humans and machines collaborate. The ambition is clear: more adaptive portfolios, better-timed decisions, and deeper insights drawn from complexity that once felt unmanageable.

We are still in the early chapters of this story. Classical quant unlocked disciplined investing. Quant 2.0 has introduced learning systems that derive those rules from data and refresh them as conditions change. We expect the next era to bring even more powerful, intelligent and transparent tools that help investors navigate global equities with speed, scale and scientific rigor.

References:

Markowitz (1952). Portfolio Selection. https://doi.org/10.2307/2975974

MarketWatch (2020). The investment debt you owe Sam Eisenstadt. https://www.marketwatch.com/story/the-investment-debt-you-owe-sam-eisenstadt-2020-09-08

Sharpe (1964). Capital Asset Prices: A Theory of Market Equilibrium under Conditions of Risk. https://doi.org/10.1111/j.1540-6261.1964.tb02865.x

Graham, Dodd (1934). Security Analysis

Banz (1981). The relationship between return and market value of common stocks. https://doi.org/10.1016/0304-405X(81)90018-0

Fama, French (1992). The Cross-Section of Expected Stock Returns. https://doi.org/10.2307/2329112

Jegadeesh, Titman (1993). Returns to buying winners and selling losers: Implications for stock market efficiency. https://doi.org/10.1111/j.1540-6261.1993.tb04702.x

Fama, French (2012). Size, value, and momentum in international stock returns. https://doi.org/10.1016/j.jfineco.2012.05.011

Important Information: The content is created by a company within the Vontobel Group (“Vontobel”) for institutional clients and is intended for informational and educational purposes only. Views expressed herein are those of the authors and may or may not be shared across Vontobel. Content should not be deemed or relied upon for investment, accounting, legal or tax advice. Past performance is not a reliable indicator of current or future performance. Investing involves risk, including possible loss of principal. Vontobel makes no express or implied representations about the accuracy or completeness of this information, and the reader assumes any risks associated with relying on this information for any purpose. Vontobel neither endorses nor is endorsed by any mentioned sources. Quantitative inputs and models use historical company, economic and/or industry data to evaluate prospective investments or to generate forecasts. These inputs could result in incorrect assessments of the specific portfolio characteristics or in ineffective adjustments to the portfolio’s exposures. As market dynamics shift over time, a previously successful input or model may become outdated and result in losses. Inputs or models may be flawed or not work as anticipated and may cause the portfolio to underperform other portfolios with similar objectives and strategies. The AI models deployed are predictive by design, which inherently involves certain risks. There are potential risks associated with AI tools as these tools, being relatively new, may harbor undetected errors or security vulnerabilities that may only surface after extensive use. The AI investment strategies are proprietary; as such, a client may not be able to fully determine or investigate the details of such methods or whether they are being followed.