Firecracker or eternal flame – which companies will benefit from AI?

Quality Growth Boutique

Artificial intelligence has been a part of our lives for years – whether it’s searching on Google, chatting with Alexa, or watching content on Instagram. Over the past decade in particular, advances in computing, storage, and the availability of data have accelerated progress in the field known as machine learning.

Why has this particular AI cycle captured so much attention? Generative AI represents a new class of AI characterized by its ability to better understand language and to create original content. Generative AI can create text, images, and audio that appear human-like without relying on explicit programming. ChatGPT was the first generative AI application to achieve broad popularity, driven by its ability to interact with humans in a more conversational way.

While most industries will likely incorporate generative AI, we don’t think it will necessarily change the fundamental nature of their businesses. Much of the impact will probably be on the efficiency side, and a company’s ability to retain the productivity gains achieved through generative AI will depend on competitive intensity; in highly competitive industries, the benefits from AI will be competed away. Material impacts are more likely to occur within the technology sector, where generative AI intersects more directly with companies’ product offerings. Conceptually, generative AI should further boost demand for IT infrastructure that deep learning already requires.

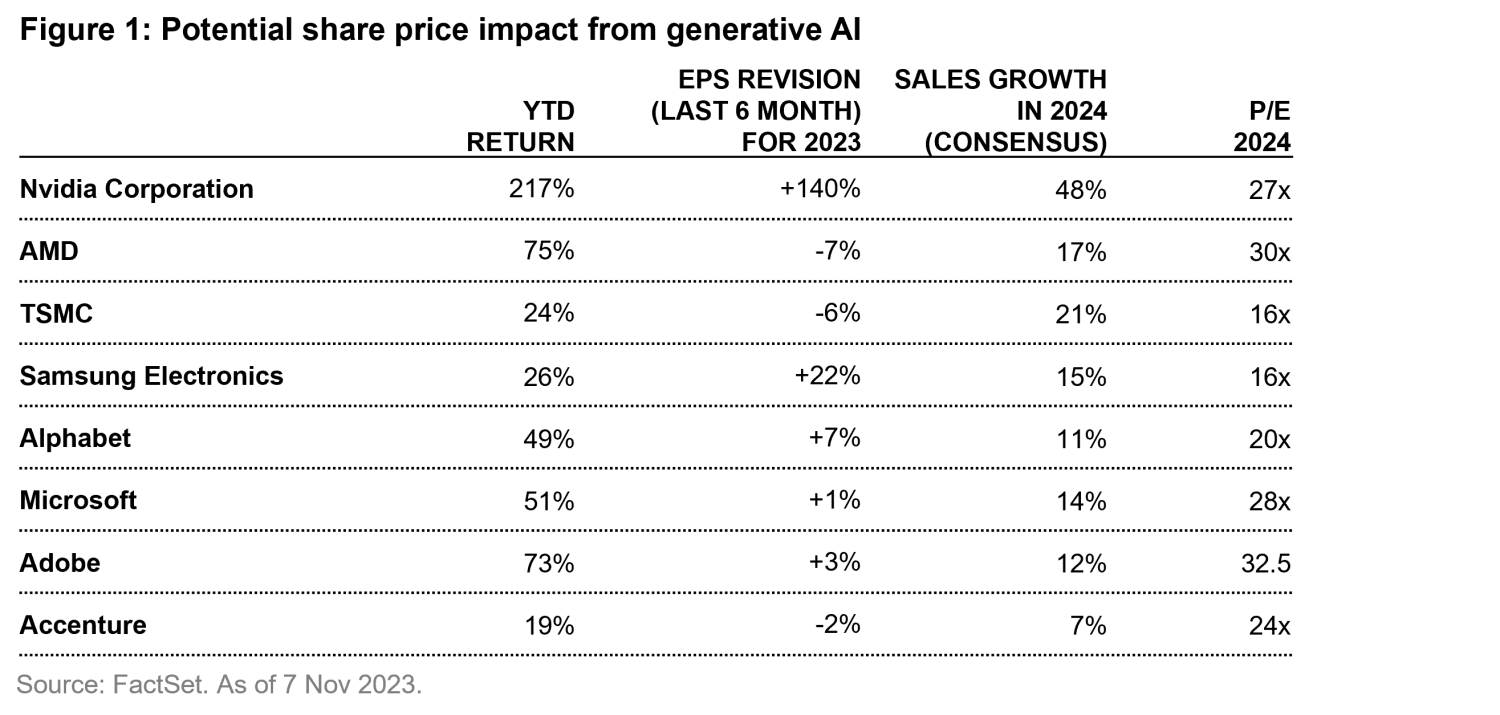

AI has been an important driver of equity returns in the tech sector this year. Investors have focused on the companies with the greatest exposure to AI (i.e., higher adoption of accelerated computing) as well as on the speculative upside. While this has its logic, the medium-term impact of AI is inherently hard to quantify at this early stage, and fluctuations in outcomes can meaningfully shift expectations for the overall company. By failing to distinguish between levels of certainty around the long-term benefits of AI, markets have disproportionately rewarded some companies over others. For example, the sharp rise in NVIDIA’s and AMD’s share prices this year indicates that investors believe they will benefit from increased AI adoption. However, this would not be possible without TSMC, the cornerstone manufacturer of the sophisticated semiconductor chips that power AI not only for NVIDIA, but also for tech giants such as Google and Microsoft.

When assessing the impact of generative AI, we focus on readily addressable use cases rather than dreaming about long-term possibilities. Generative AI is still in the early stages of development and adoption, and certain types of tasks are more immediately addressable given the strength of large language models (LLMs)1 in assisting with writing, summarization, content creation, etc. At the same time, current iterations of LLMs are not “intelligent” in the conventional sense; they act more as incredibly intricate pattern-matching machines, which makes them prone to mistakes such as hallucinations (plausible-sounding but incorrect output). These limitations make LLMs better suited to narrowly defined tasks where data and training can drive greater consistency. This also lends itself to business-facing use cases, particularly as businesses are willing to pay for efficiency gains. Several of our technology holdings have incorporated new generative AI capabilities into their existing product portfolios, which allows them to leverage their existing strengths (e.g., traditional capabilities, distribution) to drive more sustainable earnings growth.

Enthusiasm for companies that can benefit from generative AI should be coupled with strict valuation discipline. We expect the estimated AI-driven earnings boost to occur over the next few years; however, the eventual share price impact will depend on how effectively companies actually use AI to enhance earnings. As investors, we favor firms that exhibit higher predictability and are positioned to benefit from structural growth within the sector, which should make financial returns more realistic and measurable.

In this article, we highlight six companies – Microsoft, Google, Adobe, TSMC, Samsung Electronics, and Accenture – that we believe are poised for measured, long-term earnings growth from generative AI, and where we have considered a variety of risks.

Microsoft is viewed as one of the biggest software winners from AI

Investors view Microsoft as having multiple areas of upside without significant perceived vulnerabilities. Microsoft’s strategy is to package AI features as separate, premium-priced AI SKUs, branded Copilot to emphasize the assistive nature of the technology.

GitHub Copilot (assistive coding) generates code and also helps with debugging, explaining, documenting, and translating code. It works as an autocomplete tool that speeds up the initial “first draft,” though this is partially offset by more debugging and rewriting. While the suggested code can still have issues around context, efficiency, and security, the value proposition seems clear given the significant productivity benefit, particularly for scarce and well-compensated software developers.

Office Copilot represents the biggest potential AI lever, with features such as drafting or editing a memo, responding to an email, creating a PowerPoint presentation from existing files, or using Excel to analyze data and create charts. Office Copilot should also act as a natural-language interface that allows users to take advantage of Office’s full capabilities.

Copilot’s penetration may be modest at first, as it will take time for companies to figure out how AI-driven tasks drive efficiency across a broader white-collar workforce and how much of that efficiency actually accrues to the company. Copilot will also only be available to users of the broader M365 suite, which represents approximately 160 million of the overall base of 382 million Office 365 seats.

Among its other applications, Microsoft has announced Dynamics Copilot, with AI features spanning sales (customizable email content, meeting summaries), customer service (resolving customer issues, virtual agents), marketing (customer data insights, audience segments, copywriting), and supply chain management. Microsoft Security Copilot is designed to help detect, investigate, and document security incidents.

We expect AI to also drive demand for Microsoft’s Azure public cloud. Customers can access OpenAI’s foundation models (GPT, Codex, DALL-E) as well as APIs for specific tasks (e.g., image recognition, content safety, speech translation). Besides paying for Microsoft’s proprietary models, customers can simply use Azure’s IT infrastructure to run open-source models or their own models.

Estimating the revenue impact on Azure is harder because there are more unknowns. While usage of AI models will ramp up, the cost of inference is declining rapidly as companies find efficiencies. In terms of market share, it is unclear what portion of tasks will be handled by leading-edge models versus open-source models that are trained for a specific domain and are “good enough.” In addition, each model can come in various sizes depending on performance requirements. That said, Azure could generate $6 billion in incremental AI revenues within a few years, compared with an Azure revenue base of $57 billion in FY23, or closer to $43 billion excluding the SaaS portion of Azure.

AI revenues for Microsoft could add up to approximately $20 billion2, compared with $212 billion in total revenues in FY23. This would represent an increment of roughly 9% on today’s revenues, though the initial pace of adoption could be gradual as customers familiarize themselves with a new type of product. Assuming a ramp-up of 4-5 years, this would contribute approximately 2% per year to revenue growth and approximately 3% per year to EPS growth over the period.
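The sizing in the two paragraphs above is straightforward arithmetic. The minimal Python sketch below reproduces it from the figures in the text and footnote 2. The variable and function names are our own, and the annualized growth contribution rests on a simplifying assumption that is ours rather than the article’s: the AI revenues phase in over the ramp while the rest of the FY23 revenue base is held flat.

```python
# Figures in USD billions, taken from the text and footnote 2.
ai_revenue_by_source = {"Office": 11, "GitHub": 1, "Other Copilots": 2, "Azure": 6}
FY23_TOTAL_REVENUE = 212
FY23_AZURE = 57
FY23_AZURE_EX_SAAS = 43


def annualized_contribution(uplift: float, years: int) -> float:
    """Annual growth rate that compounds to the total uplift over `years`.

    Assumption (ours, not the article's): the non-AI revenue base stays flat.
    """
    return (1 + uplift) ** (1 / years) - 1


ai_revenue = sum(ai_revenue_by_source.values())
uplift = ai_revenue / FY23_TOTAL_REVENUE  # AI revenue as a share of FY23 revenue

print(f"AI revenue: ${ai_revenue}B, {uplift:.1%} of FY23 revenue")
for years in (4, 5):
    rate = annualized_contribution(uplift, years)
    print(f"{years}-year ramp: ~{rate:.1%} per year added to revenue growth")

azure_ai = ai_revenue_by_source["Azure"]
print(f"Azure AI: {azure_ai / FY23_AZURE:.1%} of Azure, "
      f"{azure_ai / FY23_AZURE_EX_SAAS:.1%} excluding SaaS")
```

With these inputs, the uplift is about 9.4% of FY23 revenue and the annualized contribution lands near 2% for either ramp length, in line with the text. The EPS estimate additionally depends on margin assumptions that the article does not spell out, so it is not modeled here.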

Google is a beneficiary of integrating AI into its franchises

The initial introduction of ChatGPT raised questions about the implications for Google, given the service’s apparent ability to answer questions on any topic. In addition, Microsoft unveiled a revamped Bing built around GPT-4. However, despite a very high-profile launch early in the year, the new Bing has failed to make a discernible dent in Google’s results. Since the beginning of the year, Google’s global search market share has remained stable at 92-93%, versus around 3% for Bing.

Google is a leader in deep learning and LLMs and, while initially cautious, was ultimately quick to incorporate generative AI features across its products. Its Search Generative Experience (SGE) embeds AI-generated snapshots, a conversational mode within search, and suggested follow-up questions. Competitive concerns around search have waned, although there are still some questions about the near-term implications of greater AI adoption for monetization and costs. Ultimately, AI appears expansionary for search, given the potential for additional use cases that have not traditionally been part of search.

Among Google’s other franchises, YouTube is an indirect beneficiary of generative AI. YouTube has long used AI for content recommendation, content moderation, and ad targeting, and these efforts will continue to improve. The main impact from generative AI will come from democratizing the production of high-quality media content, which should improve the quality of YouTube’s long-tail content library. Generative AI will also make it easier for advertisers to produce higher-quality, more customized ad creative. The impact is difficult to quantify but increases our confidence in the length of YouTube’s growth runway.

Google Cloud will likely benefit in similar ways to Microsoft. Google is working on Duet (its version of Copilot), which will supplement Workspace (its version of Office). Vertex AI is a platform on Google Cloud where customers can build, train, and run AI models, including with access to Google’s foundation LLMs. The impact is difficult to estimate but will be limited, as Cloud currently represents only approximately 11% of total company revenues. Google Cloud had already been positioned more around big data analytics use cases, and AI should help at the margin.

AI is helping Adobe’s customers become more reliant on its solutions

Adobe has been investing in AI and machine learning for some time, with Sensei, its AI tool for digital marketing introduced in 2016, a prime example of the fruits of its labor. By last year, the majority of Adobe’s customers were using Sensei to speed up and simplify workflows. Customer profiles, past marketing campaigns, sales data, etc. are integrated to suggest a marketing spend mix or differentiated marketing experiences that optimize decision making. And data, the fuel for any AI ambition, is plentiful at Adobe: its leading position across its various solutions is an advantage that differentiates its value add.

This self-reinforcing cycle of having the lead could help sustain the momentum, especially as marketing becomes more personalized and marketing solutions and platforms require more data, more integrations, and more AI. The same trends exist in Adobe’s other segments, with AI powering the editing, sharing, signing, and other capabilities used by Adobe’s Document Cloud customers.

Last but not least, the recent excitement around generative AI is most evident in Adobe’s digital content creation business. While advanced photo editing capabilities have always been table stakes, Adobe’s new Firefly feature, which generates images from text prompts, is quickly becoming a core capability. Adobe has always been more purposeful in its AI development, and all features undergo a review process for broad implications, including ethical ones. And unlike some other text-to-image applications, Firefly is not trained on ‘everything’ and is careful about commercial applications. Firefly starts with owned images from Adobe Stock and adds openly licensed content plus public content only where copyrights have expired; this can be expanded with customers’ own creative content. While both commercially usable and unusable data offer idea generation benefits, Adobe’s goal is to empower users with commercially usable content, and user feedback has indicated that this is what they want. This priority on user experience is also what is driving the discussion on the scale and speed of monetization: for now, Adobe is focused on increasing the number of users and expanding the ecosystem. Whether helping with idea generation or delivering more content, AI is helping users become more productive and ultimately more reliant on Adobe solutions.

Semiconductor components – GPUs, memory chips, and foundries – are critical to the AI technology win

AI servers cost around 15x-32x as much as a regular server (approximately $7k), driven mainly by higher silicon value and more demanding hardware specifications. Semiconductors comprise 90% of an AI server’s hardware cost (compared with 65% for a regular server), driven mainly by high-end CPUs, additional GPUs, and rising memory requirements.
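A quick back-of-the-envelope calculation makes these multiples concrete. The minimal Python sketch below assumes, as our own reading, that the $7k figure is the cost of a regular server and that a server’s cost can be treated as its hardware cost; the constant and function names are hypothetical.

```python
REGULAR_SERVER_COST = 7_000   # USD; our reading of the $7k figure in the text
AI_COST_MULTIPLES = (15, 32)  # AI server cost as a multiple of a regular server
SEMI_SHARE_AI = 0.90          # semiconductors' share of AI server hardware cost
SEMI_SHARE_REGULAR = 0.65     # semiconductors' share of regular server hardware cost


def semiconductor_content(server_cost: float, semi_share: float) -> float:
    """Dollar value of semiconductors in one server (assumes cost == hardware cost)."""
    return server_cost * semi_share


regular_semis = semiconductor_content(REGULAR_SERVER_COST, SEMI_SHARE_REGULAR)
for multiple in AI_COST_MULTIPLES:
    ai_cost = REGULAR_SERVER_COST * multiple
    ai_semis = semiconductor_content(ai_cost, SEMI_SHARE_AI)
    print(f"{multiple}x: AI server ~${ai_cost:,.0f}, semiconductors ~${ai_semis:,.0f}, "
          f"{ai_semis / regular_semis:.0f}x the ${regular_semis:,.0f} in a regular server")
```

Because the semiconductor share also rises from 65% to 90%, silicon content per server grows faster than server cost itself, by a further factor of about 1.4 (0.90 / 0.65).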

Semiconductor suppliers are largely agnostic to which company benefits most from AI. That said, we believe investors have been less focused on foundry and memory players because they are mostly based in emerging markets, where geopolitical risk is heightened, and because of concerns around the shape of the semiconductor cycle.

TSMC: a key foundry provider for generative AI and high-performance computing

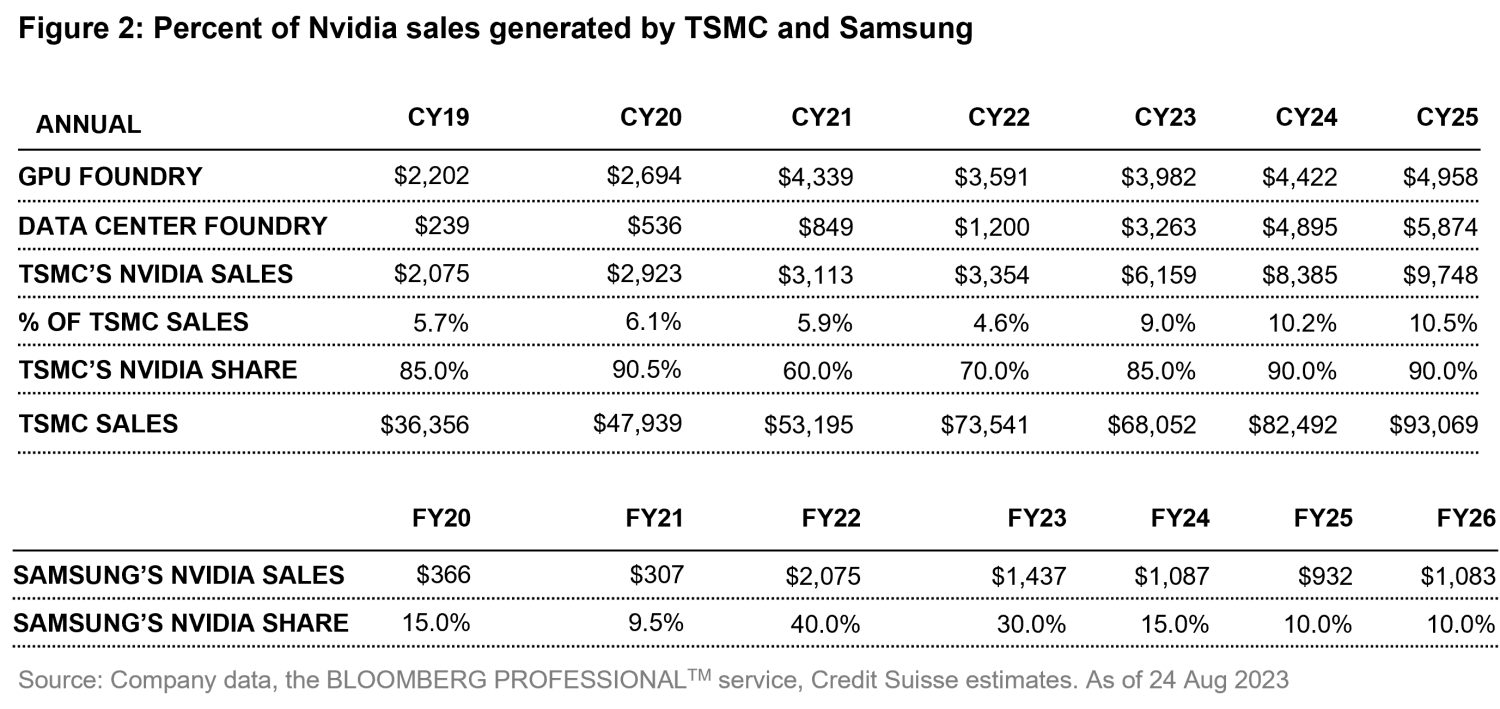

Taiwan Semiconductor Manufacturing Company’s (TSMC) role as a versatile chipmaker underpins its potential for steady, industry-wide gains, independent of any single company’s success in AI, whether it is Google, Microsoft, or another company. Regardless of which AI chip architecture dominates the market (custom ASICs or general-purpose GPUs), TSMC will be the indispensable enabler of high-performance computing solutions.

TSMC’s robust business model not only insulates it from the risks associated with the success of any particular AI company's products, but also positions it to reap quantifiable and scalable gains as the demand for AI-capable chips surges.

While definitions vary, the tech supply chain generally defines AI servers as those equipped with GPUs. TSMC is the sole foundry supplier of data center grade GPUs for both Nvidia and Advanced Micro Devices, given the difficulty and high cost of switching to other foundries. TSMC can also benefit from the rising adoption of cheaper inference solutions, which could drive higher unit volumes and hence higher leading-edge wafer consumption.

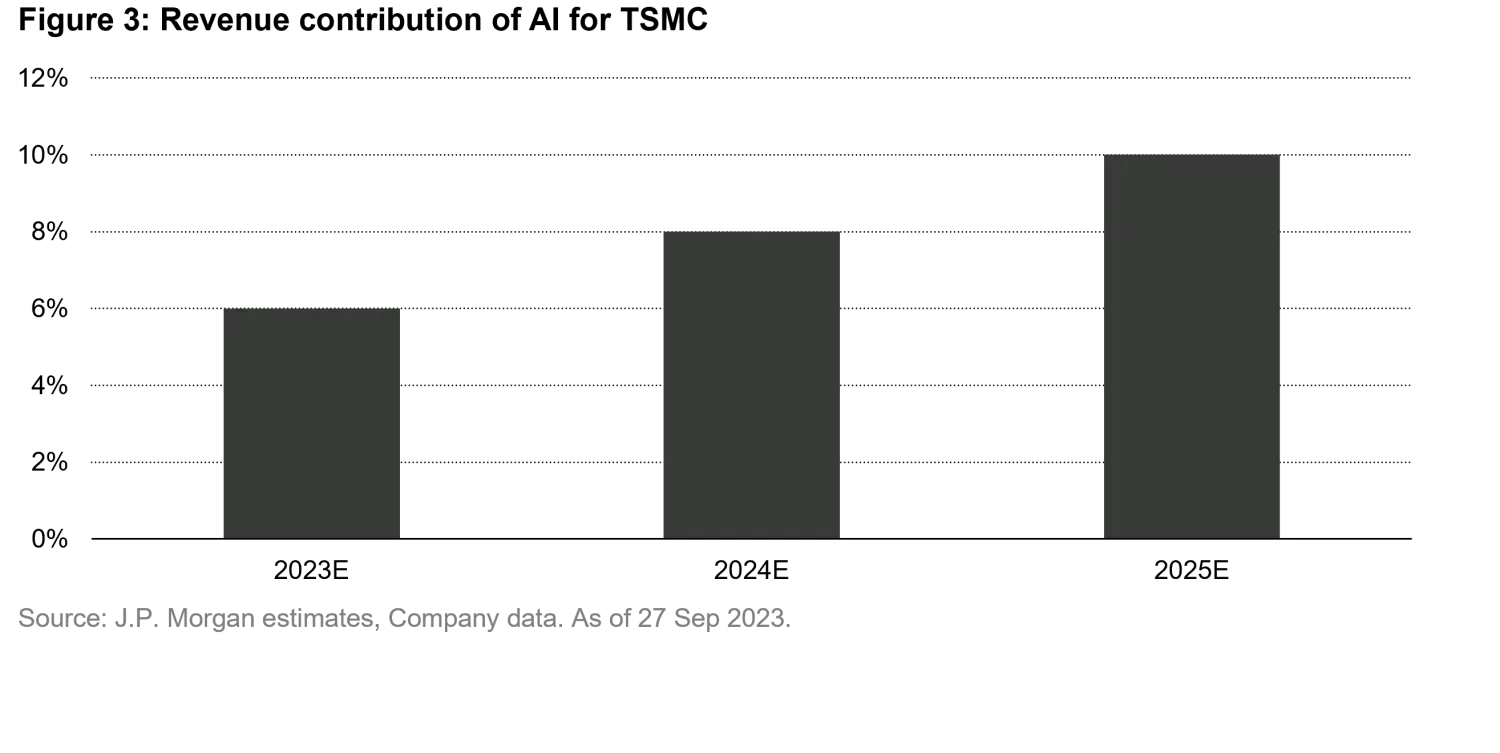

AI-related revenues currently account for 6% of TSMC’s revenues and are expected to grow at a 50% compound annual growth rate (CAGR) over the next five years, reaching a mid-teens percentage of revenues. TSMC’s chairman indicated that the company can currently fulfill only 80% of its clients’ demand for the advanced packaging solution (CoWoS) used in AI chips, and he expects supply to be sufficient in about 1.5 years. TSMC remains the enabler of data center AI solutions thanks to its process technology, CoWoS, good yields on large chips, and strong design ecosystem, and it should have a 90%+ share in AI accelerators.

Samsung Electronics: a one-stop solution for AI server customers

An AI server is expected to carry 10 times the dynamic random access memory (DRAM) content of a regular server, thanks to high bandwidth memory (HBM)3, a key component needed to run AI training processors with lower latency and less power. AI models are only as good as the data that DRAM feeds into processors, since accelerated computing requires tight integration of GPUs/ASICs with HBM. DRAM is one of the highest-value components with structural growth in AI. While server DRAM shipments were expected to grow at a mid-to-high-teens CAGR, the emergence of AI has added 10 percentage points to that CAGR, generating 3-4% additional total DRAM demand.

For Samsung, HBM is expected to account for 10%+ of DRAM sales in 2024, compared with a mid-to-high-single-digit percentage in 1H 2023. Samsung and SK hynix have guided for AI server demand to grow at a 35-40% CAGR over the next five years, with the HBM market growing at a CAGR of more than 60% over the same period4. Samsung also expects memory demand for AI accelerators to reach 11% of total DRAM demand by 2028. Samsung offers a one-stop solution for AI server customers since it can manufacture the components needed for AI server chips, including GPUs (foundry), HBM (DRAM memory), and advanced packaging.

Accenture has built a robust base in AI skills and solutions

Accenture’s foundation in AI has been built through both organic growth and strategic acquisitions. It has solid foundational strengths in consulting, solution design, and custom application development, along with deep knowledge of industry-specific and business process domains. Business consulting, known for its personalized approach and not heavily reliant on coding, is less prone to becoming a generic, commoditized service. Lastly, Accenture has deployed an AI-driven IT automation platform within its service delivery and operational models.

The company is expected to invest $3 billion in AI over the next three years and plans to double its AI workforce to 80,000, as it expects faster adoption of AI than of cloud. Accenture noted the complexity of implementing AI at scale, especially generative AI, given that fewer than 10% of clients (per Accenture estimates) have the appropriate tech stack in place to take advantage of the technology.

This year, Accenture generated approximately $300 million worth of generative AI projects. These engagements remain very small and exploratory (relative to approximately $64 billion in 2023 sales), but this early experience strengthens the company’s value proposition for each incremental project, whether it’s cloud migration or data storage optimization.

Understanding Risks

When assessing AI computing demand, risks include lower-than-expected adoption of AI, while smaller model sizes, smaller training datasets, and optimized model and algorithm design may reduce computational requirements. The key barriers to adoption are concerns around privacy, safety, compliance, and data infrastructure. There is also cannibalization risk: without fast monetization of AI applications, hyperscalers (large cloud service providers that offer computing and storage at enterprise scale) might cap their overall spending, funding AI investment by cutting back on traditional servers or operating expenses (e.g., headcount). While we don’t know how long or how severely budget cannibalization will weigh on general server demand, over the long term AI should be an incremental driver of data center demand. Lastly, CoWoS (back-end packaging) capacity is the key bottleneck now, and TSMC needs to double its capacity in 2024 from the current level to meet demand growth.

A quality growth approach to investing in AI

While revolutionary changes can seem to happen overnight in technology, it can take a long time for practical applications to play out. In the short term, the market may be overestimating the potential for some companies and the disruption to others. We remain committed to our research on generative AI, and we expect our portfolio holdings to be both users and beneficiaries of it. AI has generated excitement and expectations of a burst of growth for hardware companies geared toward building out server capacity, although these tend to be more capital-intensive, cyclical, and thus more volatile businesses. We seek companies with predictable, sustainable earnings growth that are positioned to benefit from structural shifts.

1. Large language models (LLMs) are a leap forward in a computer’s ability to understand language, learning word meanings and rules of grammar from large volumes of text.

2. AI revenues for Microsoft could add up to $20 billion: ~$11B Office, ~$1B GitHub, ~$2B other Copilots, ~$6B Azure

3. HBM: high-performance memory (a 3D-stacked DRAM architecture) with high bandwidth that requires less power to move data. HBM is like a super-wide highway that lets more data traffic flow at once while using less energy.

4. AI server demand is expected at 35-40% compound annual growth rate (CAGR) and HBM market growth is expected at 60%+ CAGR

Important Information: Past performance is not a guarantee of future results. The companies are presented herein for discussion purposes only and should not be viewed as a reliable indicator of the performance or investment profile of any composite or client portfolio. This information is provided as a means of demonstrating our investment processes and for further elaboration on the subject matter under discussion.

References to holdings and/or other companies should not be considered a recommendation to purchase, hold, or sell any security. No assumption should be made as to the profitability or performance of any security associated with them. There is no assurance, as of the date of publication, that the securities referenced as held have not been sold or repurchased. Additionally, the securities discussed do not represent all of the securities purchased, sold, or recommended for the period referenced. Further, the reader should not assume that any investments identified were or will be profitable or that any investment recommendations or decisions we make in the future will be profitable.

Any projections or forward-looking statements regarding future events or the financial performance of countries, markets and/or investments are based on a variety of estimates and assumptions. There is no assurance that the assumptions made in connection with such projections will prove accurate, and actual results may differ materially. The inclusion of forecasts should not be regarded as an indication that Vontobel considers the projections to be a reliable prediction of future events and should not be relied upon as such. Vontobel reserves the right to make changes and corrections to the information and opinions expressed herein at any time, without notice.